Downloading datasets on AWS

Downloading Data Requiring Authentication on Remote Servers (AWS)

If you work with large datasets for Big Data or Machine Learning, such as a genome or image database larger than 4 GB, and the data is available on a website only after user authentication, there are several options to consider.

Downloading the entire dataset locally and then uploading it again with scp means transferring it twice, which is rarely a good idea. A minimalist yet fully functional browser in the terminal, or a direct download link, is the way to go.

Lynx

Lynx is a minimalistic in-terminal browser. Of all the ones available, Lynx is the best in my opinion.

Installation

$ sudo apt-get install lynx

#=> Install Lynx

$ lynx www.google.com

#=> Launch Google in Lynx

Downloading Data

- On launching

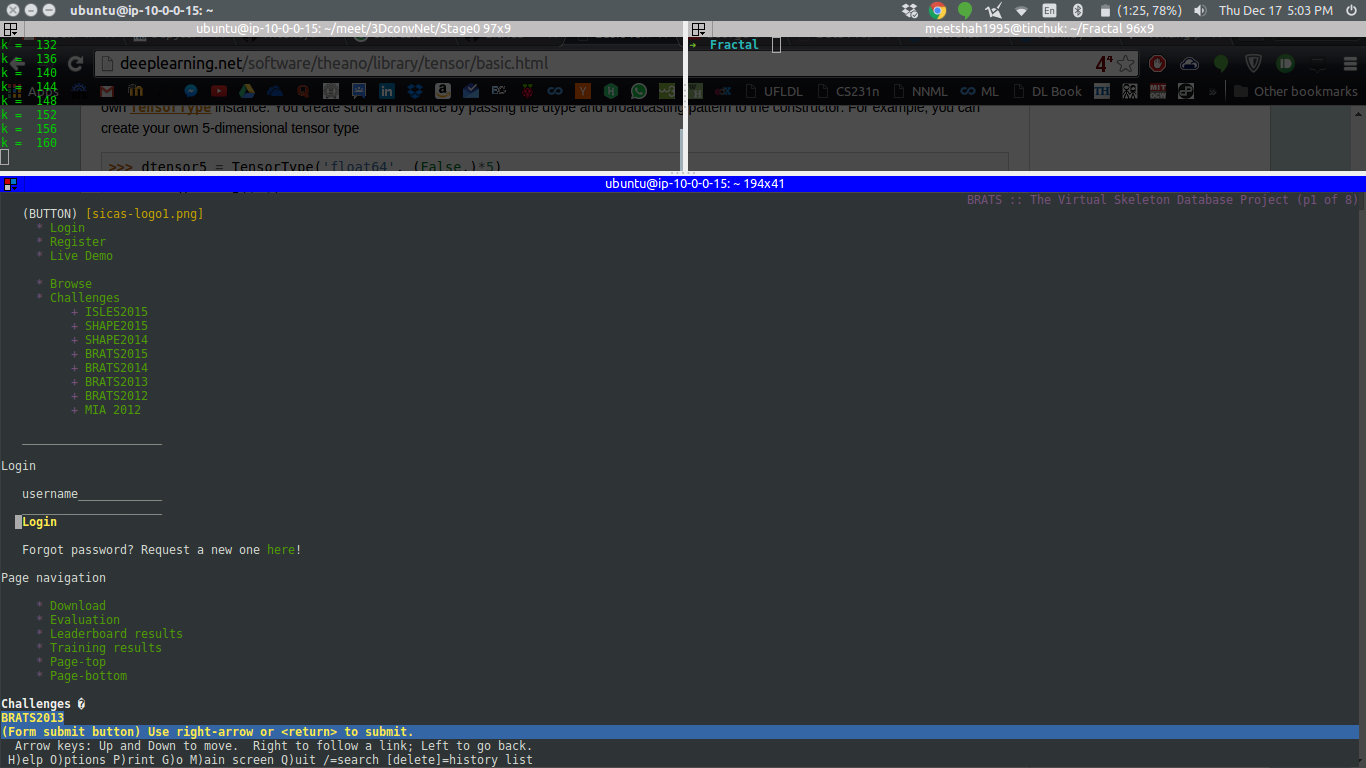

lynxto a login page of the website you can see a similar screen. Just put your credentials where needed and there you go.
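Login pages usually set session cookies, and by default Lynx asks for confirmation on each one. A minimal sketch of opening a login page with cookies accepted automatically is shown here; `LOGIN_URL` and the function name `open_login` are placeholders made up for this example, not part of any real site.

```shell
# Hypothetical login URL; replace it with the site that hosts your data.
LOGIN_URL="https://www.example.com/login"

# -accept_all_cookies makes Lynx accept every cookie without prompting,
# which keeps the login flow from being interrupted.
open_login() {
  lynx -accept_all_cookies "$LOGIN_URL"
}
```

Calling `open_login` starts the interactive session. Once logged in, move to the download link and, with the default key bindings, press `d` to download it.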

Exporting Cookies

You can also export your cookies (well not the chocolate chip ones) into a text file using a Chrome extension and then ask wget to load them for you to retrieve the data.
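wget's `--load-cookies` option reads the Netscape cookies.txt format, so a quick sanity check of the exported file can save a failed download later. The sketch below assumes the extension writes that format (worth verifying for the one you use); the function names, the key file, and the host name are hypothetical.

```shell
# Count the entries that have the seven tab-separated fields of the
# Netscape cookie format (domain, subdomain flag, path, secure flag,
# expiry, name, value). Lines starting with "#" are skipped as comments.
count_cookies() {
  awk -F '\t' '!/^#/ && NF == 7 { n++ } END { print n + 0 }' "$1"
}

# Copy the cookie file to the remote home directory.
# Hypothetical key file and host; replace both with your own.
upload_cookies() {
  scp -i "$HOME/.ssh/my-key.pem" "$1" ubuntu@my-instance.example.com:
}
```

Running `count_cookies cookies.txt` should print a number greater than zero before you call `upload_cookies cookies.txt`. A result of 0 suggests the export is in a different format or is empty.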

- Copy the cookies into a file, cookies.txt.

- Copy the file to AWS.

- Download the data using the following command:

wget -x --load-cookies cookies.txt -P data -nH --cut-dirs=5 http://www.kaggle.com/c/mnist/download/test1.zip

Copy as cURL

It turns out you can copy the entire request that Chrome makes to the website as a curl command. To do this:

- Simply go to the website with the data URL.

- Send a request to download the data on Chrome.

- Open up the developer console (Ctrl + Shift + C) and go to the Network tab.

- You will see a request with the name as the title of the compressed data file.

- Right click it and go to Copy as -> Copy as cURL.

- Paste the contents on AWS.
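One thing to watch for when pasting: curl writes the response to standard output by default, so a copied command without an output option would dump the archive into the terminal. The sketch below is a simplified stand-in for what gets pasted. The real command carries your session's own headers, and the `COOKIE` value and the function name `download` here are placeholders.

```shell
# Data URL from the example earlier in this post.
URL='http://www.kaggle.com/c/mnist/download/test1.zip'

# Placeholder, not a real cookie; the copied command contains the real one.
COOKIE='name=value'

# Name the output file after the last path segment of the URL.
OUT="${URL##*/}"

download() {
  # -L follows redirects; -o writes the response to a file instead of stdout.
  curl -L "$URL" -H "Cookie: $COOKIE" -o "$OUT"
}
```

In practice it is usually enough to paste the copied command as is and append `-o test1.zip` (and `-L` if the site redirects) before pressing Enter.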